Hello @VirtuBox,

I just created a new server and migrated my sites to it.

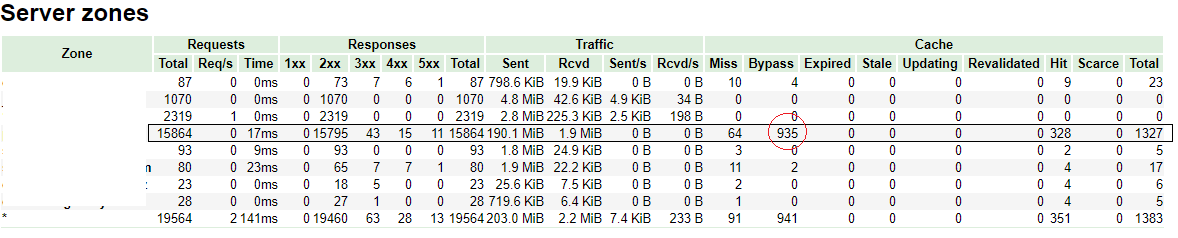

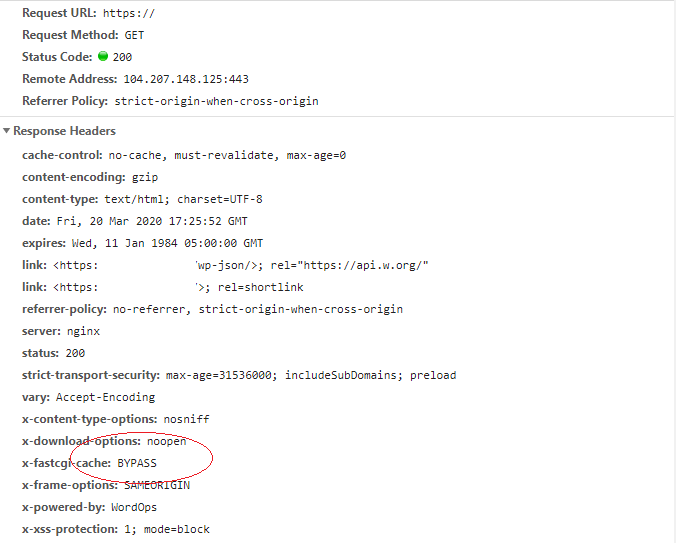

While analyzing the site that receives traffic, I noticed a high proportion of requests with cache status BYPASS.

Output of curl (domain changed):

```
$ curl -sLI domain.tld
HTTP/1.1 301 Moved Permanently
Server: nginx
Date: Fri, 20 Mar 2020 17:28:02 GMT
Content-Type: text/html
Content-Length: 162
Connection: keep-alive
Location: https://domain/
X-Powered-By: WordOps
X-Frame-Options: SAMEORIGIN
X-Xss-Protection: 1; mode=block
X-Content-Type-Options: nosniff
Referrer-Policy: no-referrer, strict-origin-when-cross-origin
X-Download-Options: noopen

HTTP/2 200
server: nginx
date: Fri, 20 Mar 2020 17:28:02 GMT
content-type: text/html; charset=UTF-8
vary: Accept-Encoding
link: <https://domain/wp-json/>; rel="https://api.w.org/"
link: <https://domain/>; rel=shortlink
x-powered-by: WordOps
x-frame-options: SAMEORIGIN
x-xss-protection: 1; mode=block
x-content-type-options: nosniff
referrer-policy: no-referrer, strict-origin-when-cross-origin
x-download-options: noopen
strict-transport-security: max-age=31536000; includeSubDomains; preload
x-fastcgi-cache: MISS
```
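A single curl request only shows one cache status, so to see whether BYPASS really dominates it can help to sample the header several times. Below is a minimal Python sketch, assuming the `x-fastcgi-cache` header name shown in the output above; the helper names `final_cache_status` and `cache_ratio` are hypothetical and not part of WordOps. It reads a raw `curl -sLI` dump, keeps only the value from the last response in the redirect chain (here the `HTTP/2 200` after the 301), and tallies statuses across samples.

```python
from collections import Counter
from typing import Dict, List, Optional


def final_cache_status(raw_headers: str) -> Optional[str]:
    """Return the x-fastcgi-cache value of the LAST response in a `curl -sLI` dump.

    With -L, curl prints one header block per hop (e.g. a 301 then a 200),
    so any value seen before the final status line is discarded.
    """
    status: Optional[str] = None
    for line in raw_headers.splitlines():
        line = line.strip()
        if line.upper().startswith("HTTP/"):
            # A new response starts here: forget values from earlier hops.
            status = None
        elif ":" in line:
            name, _, value = line.partition(":")
            # Header names are case-insensitive (HTTP/2 sends them lowercase).
            if name.strip().lower() == "x-fastcgi-cache":
                status = value.strip().upper()
    return status


def cache_ratio(statuses: List[str]) -> Dict[str, float]:
    """Share of each cache status (HIT, MISS, BYPASS, ...) among the samples."""
    counts = Counter(s.upper() for s in statuses)
    total = sum(counts.values())
    return {k: v / total for k, v in counts.items()} if total else {}


# Sample trimmed from the curl output in this post (domain placeholder kept).
sample = """HTTP/1.1 301 Moved Permanently
Server: nginx
Location: https://domain/

HTTP/2 200
server: nginx
x-fastcgi-cache: MISS
"""

print(final_cache_status(sample))  # MISS
print(cache_ratio(["MISS", "BYPASS", "BYPASS", "HIT"]))  # {'MISS': 0.25, 'BYPASS': 0.5, 'HIT': 0.25}
```

Feeding it the output of repeated `curl -sLI domain.tld` runs (for example, captured with `subprocess.run`) gives a rough HIT/MISS/BYPASS ratio to compare against what nginx VTS reports.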

The screenshots above are from Google Chrome's webmaster tools and the nginx VTS dashboard.

System information:

```
# lsb_release -a
No LSB modules are available.
Distributor ID: Ubuntu
Description: Ubuntu 18.04.4 LTS
Release: 18.04
Codename: bionic
```

```
# wo info
NGINX (1.16.1 ):
user www-data
worker_processes auto
worker_connections 50000
keepalive_timeout 8
fastcgi_read_timeout 300
client_max_body_size 100m
allow 127.0.0.1 ::1

PHP (7.2.28-3):
user
expose_php Off
memory_limit 128M
post_max_size 100M
upload_max_filesize 100M
max_execution_time 300

Information about www.conf
ping.path /ping
pm.status_path /status
process_manager ondemand
pm.max_requests 1500
pm.max_children 50
pm.start_servers 10
pm.min_spare_servers 5
pm.max_spare_servers 15
request_terminate_timeout 300
xdebug.profiler_enable_trigger off
listen php72-fpm.sock

Information about debug.conf
ping.path /ping
pm.status_path /status
process_manager ondemand
pm.max_requests 1500
pm.max_children 50
pm.start_servers 10
pm.min_spare_servers 5
pm.max_spare_servers 15
request_terminate_timeout 300
xdebug.profiler_enable_trigger on
listen 127.0.0.1:9172

PHP (7.3.15-3):
user
expose_php Off
memory_limit 128M
post_max_size 100M
upload_max_filesize 100M
max_execution_time 300

Information about www.conf
ping.path /ping
pm.status_path /status
process_manager ondemand
pm.max_requests 1500
pm.max_children 50
pm.start_servers 10
pm.min_spare_servers 5
pm.max_spare_servers 15
request_terminate_timeout 300
xdebug.profiler_enable_trigger off
listen php73-fpm.sock

Information about debug.conf
ping.path /ping
pm.status_path /status
process_manager ondemand
pm.max_requests 1500
pm.max_children 50
pm.start_servers 10
pm.min_spare_servers 5
pm.max_spare_servers 15
request_terminate_timeout 300
xdebug.profiler_enable_trigger on
listen 127.0.0.1:9173

MySQL (10.3.22-MariaDB) on localhost:
port 3306
wait_timeout 60
interactive_timeout 28800
max_used_connections 6
datadir /var/lib/mysql/
socket /var/run/mysqld/mysqld.sock
my.cnf [PATH] /etc/mysql/conf.d/my.cnf
```

```
# wo -v
WordOps v3.11.4
Copyright (c) 2019 WordOps.
```